Will Computers Make Better Music Than Humans?

Today we use computers to aid us in music composition and production, but we still retain much of the creative control over the outcome. Sometimes we play actual musical instruments and use computers to mix the recordings, add effects, and balance the sound. Other times we use sound synthesizers and sequencers that let us fine-tune a composition with the utmost precision and relative ease, but we still control the outcome.

What if the role of the human musician were drastically diminished, or eliminated completely? Could computers make music all by themselves, and could that music actually sound good to our ears? The answer is yes. David Cope has already demonstrated it with a virtual composer he calls Emily Howell, a software program that can compose music on its own.

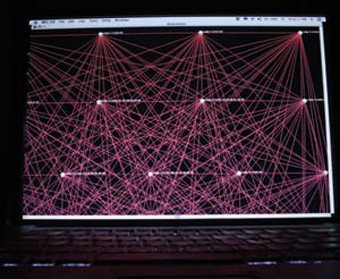

The way it actually works is complicated, but the important thing to note is that it doesn't technically do anything less than a human does when composing music. It just does it differently. Humans, for example, genuinely feel emotion, whereas a program such as this cannot. However, if the influence of emotion cannot be fully captured by some variable in a computer program, we could simply treat it as a random factor, and computers can generate randomness too.

David Cope argues that all art is derived from our past experiences, including our experiences of art created by others before us. When we create art, we process all of these past experiences and recombine them into a version of our own, something we tend to call "original art". This shouldn't diminish its value; it's simply what it is. Everything in nature is derivative in some sense because of causality: what came before inevitably determines what comes after. Why should humans be exempt from this process?

The fact remains that no two human beings experience the world from the same vantage point over the course of their lives. That is simply impossible, since no two people can occupy the same spot in space at the same time. This, combined with the complexity of the human mind and its development, gives each of us a sufficient claim to uniqueness. This is why I don't think computer composers necessarily threaten the idea that a human artist can create amazing art with an element of true originality.

David Cope's Emily Howell, however, could be just the beginning of what machines can do. What if machines could actually do better than humans? What if we combined an advanced version of software such as Emily Howell with something like a brain-computer interface, and had it literally produce music in real time based on what it reads from a human mind?

In other words, what if in the future we had devices that scan our brains and, in real time, create and play precisely the music we would enjoy most at that specific moment? Perhaps we could also program them, instead of matching our moods, to play the precise tunes needed to *get* us into a specific mood. The possibilities are awesome, perhaps frighteningly so.

Human musicians can already do this to a limited extent by reading the crowd's feedback during a live performance and adjusting their playing to maximize the audience's enjoyment. But can they match the precision of a computer in this regard? And if they can't, what might the existence of such a device do to the value of human composers?

I, for one, don't think humans will become obsolete. For one thing, many people might simply follow a human bias and continue to prefer music created by real, feeling, flesh-and-blood minds. Beyond that, this is precisely why the transhumanist movement predicts, and prescribes, enhancing human capacities to keep pace with machines once they begin to exceed us, but that's a different topic.